The Foundations of Moral Philosophy

The Trolley Problem is a classic thought experiment in ethics: a runaway trolley will kill five people unless it is diverted onto a side track, where it will kill one. The scenario forces us to confront fundamental questions about moral responsibility and the justification of actions, and it shows how quickly settled notions of right and wrong come under strain. Analyzing the dilemma means engaging with philosophical approaches such as utilitarianism and deontology, which take divergent views on individual rights versus collective welfare. These frameworks provide the foundation for examining the moral questions the Trolley Problem raises.

Utilitarianism and the Calculus of Consequences

Utilitarianism, a consequentialist ethical theory, holds that the right action is the one that maximizes overall well-being and minimizes suffering. Applied to the Trolley Problem, a utilitarian would typically favor the choice that reduces total harm, even if it means sacrificing one person to save several. This perspective emphasizes aggregate outcomes and often yields conclusions that conflict with common moral intuitions. It also requires weighing the likely consequences of each option and deciding how lives are to be counted against one another.

Deontology and the Inherent Value of Persons

In contrast to utilitarianism, deontological ethics focuses on moral duties and principles. From this viewpoint, deliberately harming an individual violates a fundamental moral rule, whatever the potential benefits. The approach stresses the intrinsic worth of every person, independent of situational outcomes. The Trolley Problem starkly illustrates the tension between adhering to moral rules and pursuing the best overall result.

The Role of Intent and Responsibility

The Trolley Problem also shows how intention shapes ethical evaluation. Is redirecting a trolley morally equivalent to physically pushing someone onto the tracks, given that the outcome is the same? In the first case the death is a foreseen side effect of diverting the trolley; in the second, the person is used directly as the means of stopping it. This difference in agency and purpose often leads people to judge the two cases differently, and it shows why moral accountability cannot be read off outcomes alone.

The Impact of Context and Uncertainty

The Trolley Problem presents deliberately simplified scenarios, whereas real-world ethical decisions are made amid ambiguity and complexity. The circumstances surrounding a choice, including incomplete information and unpredictable consequences, frequently complicate moral judgment. Applying theoretical frameworks is therefore hardest in exactly the messy situations where guidance is most needed, and decisions made on partial information can produce outcomes no one intended.

Moral Intuition and Emotional Response

Initial reactions to the Trolley Problem are typically visceral and emotional. These instinctive responses, shaped by personal values and experience, strongly influence our ethical reasoning. Acknowledging them helps reveal the limits of purely logical approaches to morality and the interplay between reason and emotion in ethical decision-making.

The Trolley Problem and Contemporary Ethics

This enduring thought experiment remains useful for examining ethical challenges in fields as varied as medicine, law, and corporate governance. The questions it raises underscore the continuing need for rigorous ethical analysis, and the insights gained from studying it can inform approaches to complex moral issues in modern society.

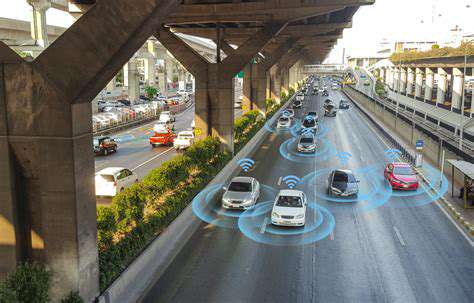

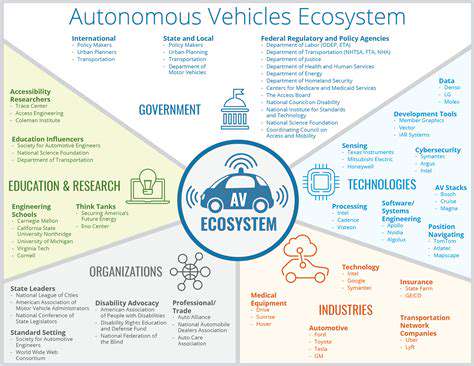

Data Bias and Equity in Autonomous Vehicle Decision-Making

Understanding Data Bias

Data bias in autonomous systems refers to systematic distortions in the datasets used to train them. It can arise from several sources: unrepresentative samples, flawed collection methods, or design choices that build prejudice into the algorithm itself. Identifying and correcting data bias is critical to fair outcomes, because skewed data can produce discriminatory or unreliable results. For example, if an autonomous vehicle's training data comes mainly from urban environments with particular demographic characteristics, its performance may degrade in other settings.
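As a rough illustration of the urban-data example, one way to spot an unrepresentative sample is to compare how often each environment appears in the training set against the share expected in deployment. The sketch below is a minimal one under stated assumptions: the function names, the category labels, the reference shares, and the 0.05 tolerance are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def representation_gaps(samples, reference_shares):
    """Return {category: observed share - expected share}.

    `samples` is a list of category labels, one per training example.
    `reference_shares` maps each category to its expected share (hypothetical values).
    """
    if not samples:
        raise ValueError("samples must not be empty")
    counts = Counter(samples)
    total = len(samples)
    return {
        category: counts.get(category, 0) / total - expected
        for category, expected in reference_shares.items()
    }


def underrepresented(gaps, tolerance=0.05):
    """List categories whose observed share falls short by more than `tolerance`."""
    return sorted(category for category, gap in gaps.items() if gap < -tolerance)


# Hypothetical toy data: 8 urban, 1 suburban, 1 rural training scene.
samples = ["urban"] * 8 + ["suburban"] + ["rural"]
reference_shares = {"urban": 0.5, "suburban": 0.3, "rural": 0.2}  # assumed targets

gaps = representation_gaps(samples, reference_shares)
flagged = underrepresented(gaps)
print(flagged)
```

A check like this only detects imbalance along the categories someone thought to label; it says nothing about biases in attributes that were never recorded.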

The consequences of data bias can be far-reaching. It may appear as minor inaccuracies or escalate into systemic discrimination in areas such as employment, lending, or public safety. Technical fixes alone are not enough, because the bias often reflects broader societal inequities. A sound approach must consider both the technical side of data processing and the ethical implications of deploying autonomous systems in diverse communities.

Ensuring Equity in Autonomous Systems

Building equitable autonomous systems requires proactive measures to counteract data bias at every stage: data acquisition, preprocessing, and algorithm development. Collecting data from varied populations and scenarios is the starting point for a representative training set.

Rigorous evaluation criteria are another crucial step. Metrics should assess not only overall accuracy but also fairness across demographic groups. Systems should also be transparent and auditable, both to establish trust and to make it possible to trace a bias to its source and correct it.
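To make the distinction between overall accuracy and per-group fairness concrete, the following minimal sketch computes accuracy separately for each group and reports the largest gap. The function names and the toy labels are assumptions introduced here for illustration; accuracy gap is only one of many possible fairness measures.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} for parallel lists of labels, predictions, and group tags."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        total[group] += 1
        if truth == prediction:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical toy data: two groups of four examples each.
y_true = [1, 1, 0, 1, 0, 1, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = accuracy_by_group(y_true, y_pred, groups)
gap = accuracy_gap(per_group)
print(overall, per_group, gap)
```

In this toy data the overall accuracy of 0.75 hides the fact that group "a" is classified perfectly while group "b" is right only half the time, which is the kind of disparity an accuracy-only metric would miss.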

Ethical guidelines and regulatory frameworks are a further component. These standards should anticipate disparate impacts on different population segments and aim to prevent discriminatory outcomes. Because bias can surface differently in each application, from medical diagnostics to autonomous transportation, the standards need to account for the specific context of use.

Continuous monitoring and refinement are needed to sustain equitable performance. This means regularly assessing the system across diverse user groups and environments and adjusting it as new biases emerge. Equity in autonomous systems is an ongoing commitment rather than a one-time fix.

The Role of Human Oversight and Accountability

The Importance of Human Judgment

Human oversight is a critical safeguard for ensuring that AI systems operate ethically and responsibly. Humans bring contextual understanding, emotional intelligence, and nuanced judgment that current AI systems do not reliably match. These qualities matter most in situations that call for complex ethical reasoning or adaptation to unanticipated circumstances, where human supervision provides a check against AI errors and undesirable outcomes.

Moreover, human oversight helps align AI systems with societal values and priorities. This alignment grows increasingly important as AI permeates sensitive domains like healthcare, criminal justice, and financial services.

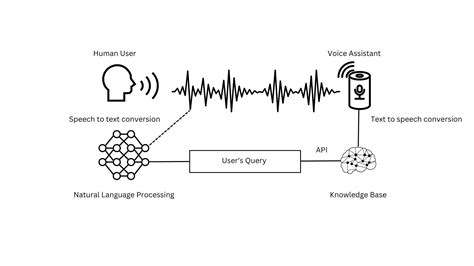

Understanding the Limitations of AI

While AI excels at processing large datasets and identifying patterns, it often lacks human-like comprehension and common sense. This gap can lead to flawed decisions when a system encounters novel situations or complex social contexts. For instance, a system trained on historical data may perpetuate systemic biases unless it is properly monitored. Human oversight helps identify and correct such failures.

Ensuring Accountability and Transparency

Human oversight mechanisms help establish clear accountability when AI systems err, which is fundamental to public trust in AI applications. Human involvement can also improve transparency by making AI decision processes more interpretable to end users.

Transparency is what makes decisions explainable and justifiable, particularly in high-stakes domains that affect people's lives. Understanding how and why a system reaches a specific conclusion enables better oversight and continuous improvement.

Addressing Bias and Fairness

Because AI systems learn from historical data, they risk perpetuating existing societal biases. Human oversight plays a central role in detecting and mitigating them: by scrutinizing training data and system outputs, human reviewers can identify and address unfair or discriminatory patterns.

Through vigilant monitoring and intervention, human oversight helps prevent AI from reinforcing societal inequities, so that these technologies serve all community members more fairly.
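One way reviewers might scrutinize system outputs is to compare how often each group receives a favorable decision and to route large disparities to a human for inspection. The sketch below is illustrative only: the function names and toy decisions are hypothetical, and the 0.8 ratio threshold is an assumption borrowed from the commonly cited "four-fifths" rule of thumb, not a requirement stated in this article.

```python
def favorable_rates(decisions, groups):
    """Return {group: share of favorable decisions} for parallel lists.

    `decisions` holds 1 (favorable) or 0 (unfavorable) per case.
    """
    totals = {}
    favorable = {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + (1 if decision else 0)
    return {group: favorable[group] / totals[group] for group in totals}


def needs_review(rates, min_ratio=0.8):
    """Flag for human review when the lowest rate is below `min_ratio` of the highest.

    The default threshold is an assumed rule of thumb, not a legal test.
    """
    highest = max(rates.values())
    if highest == 0:
        return False  # no group received a favorable decision; nothing to compare
    return min(rates.values()) / highest < min_ratio


# Hypothetical toy data: group "a" is favored three times out of four, group "b" once.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = favorable_rates(decisions, groups)
flag = needs_review(rates)
print(rates, flag)
```

A flag here is a prompt for human judgment rather than a verdict: a disparity may have a legitimate explanation, and the absence of one does not show that the system is fair.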

Promoting Ethical Development and Deployment

Human oversight is essential for guiding ethical AI development and responsible deployment. Building human values and ethical considerations into AI design makes it more likely that these technologies benefit humanity. In practice this involves establishing ethical guidelines, creating mechanisms for public engagement, and developing governance frameworks.

Proactive oversight lets reviewers anticipate potential negative consequences and prepare mitigation strategies before harm occurs. This forward-looking approach helps keep AI development aligned with societal well-being and human values.