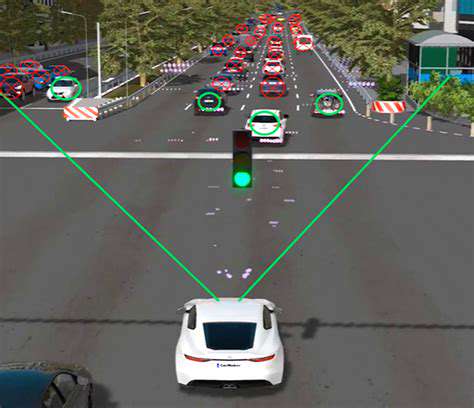

Autonomous vehicles rely on a range of sensors to perceive their surroundings, including cameras, lidar, radar, and ultrasonic sensors. Each sensor provides unique information but also has limitations. Cameras excel at capturing visual detail but struggle in low light. Lidar provides precise distance measurements but can be degraded by rain, fog, or snow, and radar is susceptible to reflections and clutter. Sensor fusion, the process of combining data from multiple sensors, is crucial for overcoming these individual limitations and building a comprehensive, robust model of the environment. This combined view is essential for safe and reliable autonomous driving, allowing the vehicle to navigate complex scenarios accurately and confidently.

Combining diverse sensor data yields a more complete and accurate picture of the environment, which improves object detection, classification, and tracking. For instance, a camera might detect and classify a pedestrian, while radar measures the pedestrian's range and radial speed; tracking these measurements over time gives the pedestrian's trajectory. By combining these data streams, the vehicle can better predict where the pedestrian is heading and react appropriately, for example by slowing down before a potential collision.
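A minimal sketch of the camera-radar pairing in the pedestrian example, assuming both sensors report bearing (azimuth) in a shared vehicle frame. The class names, the 2-degree gate, the sign convention for radial speed, and the nearest-azimuth rule are all illustrative assumptions; production trackers typically use more elaborate association, such as a global assignment across all objects.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CameraDetection:
    label: str          # class predicted by the camera classifier
    azimuth_deg: float  # bearing relative to the vehicle heading


@dataclass
class RadarDetection:
    azimuth_deg: float
    range_m: float
    radial_speed_mps: float  # Doppler speed; negative = approaching (assumed convention)


@dataclass
class FusedObject:
    label: str
    azimuth_deg: float
    range_m: float
    radial_speed_mps: float


def associate(
    cam: CameraDetection, radar: list[RadarDetection], gate_deg: float = 2.0
) -> Optional[FusedObject]:
    """Pair a camera detection with the radar return closest in azimuth, if inside the gate."""
    candidates = [r for r in radar if abs(r.azimuth_deg - cam.azimuth_deg) <= gate_deg]
    if not candidates:
        return None  # camera-only object: no radar range or speed available
    best = min(candidates, key=lambda r: abs(r.azimuth_deg - cam.azimuth_deg))
    # The camera contributes the class; the radar contributes range and speed.
    return FusedObject(cam.label, cam.azimuth_deg, best.range_m, best.radial_speed_mps)


if __name__ == "__main__":
    ped = CameraDetection("pedestrian", azimuth_deg=10.0)
    returns = [RadarDetection(9.5, 22.0, -1.4), RadarDetection(-30.0, 40.0, 0.0)]
    print(associate(ped, returns))
```

Each sensor fills in what the other lacks: the camera alone cannot measure speed directly, and the radar alone gives a poor class label.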

Overcoming Sensor Limitations through Fusion

Individual sensors have inherent limitations that can significantly reduce the reliability of an autonomous driving system. Heavy rain or fog can drastically reduce the effectiveness of cameras and lidar, and radar readings can be corrupted by occlusion or by reflections off nearby surfaces. Sensor fusion mitigates these issues by leveraging the strengths of each sensor: radar, for example, remains comparatively reliable in fog, so it can compensate when camera and lidar degrade. By integrating data from multiple sources, the system offsets the weaknesses of individual sensors and maintains an accurate perception of its surroundings, which is crucial for safe maneuvering in dynamic and challenging conditions.
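One common way to let the stronger sensor dominate is inverse-variance weighting, sketched below under the assumption that each sensor reports an independent, unbiased estimate of the same quantity with a known variance. The variances in the example are hypothetical; a real system would need to model how rain or fog inflates them for each sensor.

```python
def fuse_estimates(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent estimates of one quantity.

    Each entry is (value, variance). Returns (fused_value, fused_variance).
    A sensor with larger variance (e.g. a camera in fog) gets proportionally less weight.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused_value, 1.0 / total


if __name__ == "__main__":
    # Hypothetical range estimates in metres, in fog: (radar, camera, lidar).
    # Radar keeps a small variance; camera and lidar get inflated ones.
    fog = [(30.2, 0.1), (28.0, 4.0), (31.5, 2.0)]
    print(fuse_estimates(fog))  # fused value stays close to the radar reading
```

The fused variance is always smaller than the smallest input variance, which is the formal sense in which adding a sensor, even a degraded one, can still help.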

Sensor fusion also makes the perception system more robust. Because several sensors observe the same scene, the redundant information lets the system limit the impact of erroneous or missing data from any single sensor. This redundancy is essential for reliable autonomous operation in complex and unpredictable environments. In effect, fusion acts as a safety net when one sensor fails or misreports.
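A simple illustration of how redundancy absorbs a faulty or silent sensor: take the median of whichever redundant readings arrived. This is a sketch, not a complete fault-detection scheme. It assumes all readings measure the same quantity in the same units, and with only two valid readings the median just averages them, so it can no longer out-vote a bad one.

```python
import statistics
from typing import Optional, Sequence


def robust_range(readings: Sequence[Optional[float]]) -> Optional[float]:
    """Combine redundant range readings of one object.

    None marks a sensor that returned no data this cycle. The median ignores
    a single wild value as long as at least three readings are present.
    """
    valid = [r for r in readings if r is not None]
    if not valid:
        return None  # every sensor dropped out; the caller must handle this case
    return statistics.median(valid)


if __name__ == "__main__":
    print(robust_range([20.1, 19.9, 95.0]))  # one faulty sensor is out-voted
    print(robust_range([20.1, None, 19.9]))  # one missing sensor is skipped
    print(robust_range([None, None, None]))  # no data at all
```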

Real-World Applications and Benefits of Sensor Fusion

The benefits of sensor fusion are practical, not just theoretical. In real-world use, fusion allows autonomous vehicles to navigate complex urban environments such as intersections, parking lots, and crowded pedestrian areas. By combining data from various sensors, the vehicle can perceive multiple objects at once, along with their relative positions and likely movements. This understanding is critical for safe decision-making and maneuvering in dynamic situations.
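Estimating an object's position and motion from several sensors is commonly done with a Kalman filter. The sketch below is a minimal one-dimensional constant-velocity filter in plain Python, assuming each sensor reports the object's position with a known, independent noise variance. The process-noise value and the sensor variances in the example are hypothetical. Because measurements are applied as sequential updates, readings from different sensors can be fused in whatever order they arrive.

```python
class ConstantVelocityKF:
    """1-D constant-velocity Kalman filter with state [position, velocity].

    The covariance is stored as its three unique entries because it is symmetric.
    """

    def __init__(self, q: float = 0.5, p0: float = 100.0):
        self.pos = 0.0
        self.vel = 0.0
        self.p00, self.p01, self.p11 = p0, 0.0, p0  # large initial uncertainty
        self.q = q  # white-noise acceleration intensity (assumed value)

    def predict(self, dt: float) -> None:
        """Propagate state and covariance: P = F P F^T + Q with F = [[1, dt], [0, 1]]."""
        self.pos += self.vel * dt
        q = self.q
        p00 = self.p00 + 2.0 * dt * self.p01 + dt * dt * self.p11 + q * dt**4 / 4.0
        p01 = self.p01 + dt * self.p11 + q * dt**3 / 2.0
        p11 = self.p11 + q * dt**2
        self.p00, self.p01, self.p11 = p00, p01, p11

    def update(self, z: float, r: float) -> None:
        """Fuse one position measurement z with noise variance r (from any sensor)."""
        s = self.p00 + r                     # innovation variance, H = [1, 0]
        k0, k1 = self.p00 / s, self.p01 / s  # Kalman gain
        y = z - self.pos                     # innovation
        self.pos += k0 * y
        self.vel += k1 * y
        # P = (I - K H) P, written out for the 2x2 case
        p00 = (1.0 - k0) * self.p00
        p01 = (1.0 - k0) * self.p01
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p11 = p00, p01, p11


if __name__ == "__main__":
    kf = ConstantVelocityKF()
    dt = 0.1
    for k in range(1, 51):
        kf.predict(dt)
        true_pos = 2.0 * k * dt      # object moving at 2 m/s; noise-free for clarity
        kf.update(true_pos, r=0.01)  # precise lidar position (hypothetical variance)
        kf.update(true_pos, r=1.0)   # noisier camera position (hypothetical variance)
    print(f"position {kf.pos:.2f} m, velocity {kf.vel:.2f} m/s")
```

Neither sensor measures velocity here, yet the filter recovers it from the sequence of fused positions; a radar Doppler measurement could be added as a second update type with H = [0, 1].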

Sensor fusion also improves object recognition and classification. A fusion system can distinguish vehicles, pedestrians, and cyclists more reliably than any single sensor, even in challenging conditions, because a cue that is ambiguous to one sensor (for example, a dim camera image) can be resolved by another (such as the object's shape in the lidar point cloud). Accurate classification is essential for safe interaction with other road users, since the appropriate response to a cyclist differs from the response to a parked car.
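A minimal sketch of fusing classifier outputs, assuming each sensor's classifier returns a probability per class, the sensors' errors are conditionally independent given the true class, and the class prior is uniform. Under those assumptions the fused posterior is the normalized product of the per-sensor probabilities. The probabilities in the example are made up for illustration.

```python
def fuse_class_probs(*sensor_probs: dict[str, float]) -> dict[str, float]:
    """Naive-Bayes style fusion of per-sensor class probabilities (uniform prior assumed)."""
    if not sensor_probs:
        raise ValueError("need at least one sensor")
    classes = sensor_probs[0].keys()
    if any(p.keys() != classes for p in sensor_probs):
        raise ValueError("all sensors must score the same set of classes")
    fused = {c: 1.0 for c in classes}
    for probs in sensor_probs:
        for c in classes:
            fused[c] *= probs[c]  # independent evidence multiplies
    total = sum(fused.values())
    if total == 0.0:
        raise ValueError("no class has nonzero support from every sensor")
    return {c: v / total for c, v in fused.items()}


if __name__ == "__main__":
    # Low light: the camera is unsure between pedestrian and cyclist.
    camera = {"pedestrian": 0.5, "cyclist": 0.4, "vehicle": 0.1}
    # Lidar shape features favour a pedestrian.
    lidar = {"pedestrian": 0.7, "cyclist": 0.2, "vehicle": 0.1}
    print(fuse_class_probs(camera, lidar))
```

The independence assumption is rarely exact in practice, so fused probabilities from this rule tend to be overconfident; it is shown here only to make the combination step concrete.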

The Future of Sensor Fusion in Autonomous Driving

Sensor fusion systems for autonomous vehicles are likely to become more sophisticated and reliable. Ongoing research focuses on improving the accuracy and efficiency of fusion algorithms, which could yield faster processing and better decision-making. Emerging sensor technologies, such as higher-resolution cameras and more advanced radar systems, will further extend what fusion systems can do.

As the technology evolves, sensor fusion will play an increasingly important role in the safety, reliability, and efficiency of autonomous vehicles. Beyond improving vehicle performance, it should also support wider adoption of autonomous driving and, with it, safer and more intelligent transportation systems.

Improving Obstacle Detection and Avoidance with Ultrasonic Sensors

Improving Obstacle Detection Accuracy

Obstacle detection is a crucial part of autonomous navigation: its accuracy and reliability directly affect the safety and performance of robots and self-driving vehicles. Current methods often struggle in complex environments, such as those with cluttered or moving obstacles. Improving detection accuracy is therefore essential for the widespread adoption of autonomous technologies, and it requires work on several fronts, including sensor technology, data processing, and machine learning models.
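The ultrasonic sensors in this section's title measure range by time of flight: the sensor emits a sound pulse and times its echo, and because the pulse travels to the obstacle and back, the distance is half the speed of sound multiplied by the echo time. A minimal sketch, assuming dry air and the common linear approximation c ≈ 331.3 + 0.606·T m/s (T in °C); the function names are hypothetical.

```python
def speed_of_sound_mps(air_temp_c: float) -> float:
    """Approximate speed of sound in dry air (common linear fit), in m/s."""
    return 331.3 + 0.606 * air_temp_c


def ultrasonic_distance_m(echo_time_s: float, air_temp_c: float = 20.0) -> float:
    """One-way distance to an obstacle from a round-trip ultrasonic echo time."""
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return speed_of_sound_mps(air_temp_c) * echo_time_s / 2.0


if __name__ == "__main__":
    # A 5.8 ms echo at 20 degC corresponds to roughly 1 m.
    print(round(ultrasonic_distance_m(0.0058), 3))
```

Temperature compensation matters: between 0 °C and 30 °C the speed of sound changes by about 5 percent, and an uncompensated sensor inherits that error in every range it reports.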

One significant challenge is distinguishing real obstacles from spurious detections. Environmental factors such as shadows or reflections can confound sensor readings and produce false positives. Algorithms must filter these out and keep only genuine obstacles, and robust feature extraction and classification methods are essential for high precision, particularly in challenging scenarios.
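One simple way to suppress transient false positives, such as a reflection that appears in a single frame, is temporal M-of-N confirmation: report an obstacle only if it was detected in at least M of the last N frames. The sketch assumes detections have already been associated with one candidate obstacle across frames; M = 3 and N = 5 are illustrative, not tuned values. It complements feature-based classification rather than replacing it.

```python
from collections import deque


class PersistenceFilter:
    """Confirm an obstacle only if it was detected in at least m of the last n frames."""

    def __init__(self, m: int = 3, n: int = 5):
        if not 0 < m <= n:
            raise ValueError("require 0 < m <= n")
        self.m = m
        self.history: deque[bool] = deque(maxlen=n)  # oldest frame drops out automatically

    def update(self, detected: bool) -> bool:
        """Record this frame's raw detection and return the confirmed state."""
        self.history.append(detected)
        return sum(self.history) >= self.m


if __name__ == "__main__":
    glint = PersistenceFilter()
    print([glint.update(d) for d in [False, True, False, False, False]])  # never confirmed
    wall = PersistenceFilter()
    print([wall.update(True) for _ in range(4)])  # confirmed from the third frame on
```

The trade-off is latency: a real obstacle is confirmed only after at least M frames, so M must be chosen against the sensor's frame rate and the vehicle's stopping distance.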

Enhancements to Autonomous Navigation

Improved obstacle detection translates directly into better autonomous navigation. More accurate detection enables more confident and precise path planning, so robots and vehicles can move through complex environments more safely and efficiently. This matters for applications such as warehouse automation, delivery services, and even planetary exploration.
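To make the link between detection and planning concrete, the sketch below marks detected obstacles in a small occupancy grid and finds a path with breadth-first search. The grid representation, 4-connectivity, and function names are illustrative assumptions; practical planners usually work with finer grids, vehicle dynamics, and cost-aware search such as A*. The example shows why accuracy matters: false-positive cells can block a feasible route, while a missed obstacle lets the planner route straight through it.

```python
from collections import deque
from typing import Optional

Cell = tuple[int, int]


def build_grid(rows: int, cols: int, obstacles: list[Cell]) -> list[list[int]]:
    """Occupancy grid: 1 marks a cell containing a detected obstacle, 0 is free."""
    grid = [[0] * cols for _ in range(rows)]
    for r, c in obstacles:
        grid[r][c] = 1
    return grid


def plan_path(grid: list[list[int]], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Shortest 4-connected path from start to goal by breadth-first search, or None."""
    rows, cols = len(grid), len(grid[0])
    came_from: dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            node: Optional[Cell] = cell
            while node is not None:  # walk back to the start
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None  # goal unreachable given the detected obstacles


if __name__ == "__main__":
    grid = build_grid(3, 3, [(1, 1)])
    print(plan_path(grid, (0, 0), (2, 2)))  # detours around the centre cell
    blocked = build_grid(3, 3, [(1, 0), (1, 1), (1, 2)])
    print(plan_path(blocked, (0, 0), (2, 2)))  # a wall of detections blocks every route
```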

Detecting and reacting to dynamic obstacles, such as pedestrians or moving vehicles, is equally critical for safe interaction. Sensor data must be processed in real time so the system can respond before a changing situation becomes dangerous. Advanced algorithms and robust sensor fusion techniques can significantly improve the responsiveness and safety of autonomous systems.
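A common way to decide whether a dynamic obstacle needs an immediate response is time to collision (TTC): the current range divided by the closing speed, assuming both stay constant. The 2-second braking threshold and the function names below are hypothetical, chosen only for illustration.

```python
import math


def time_to_collision(range_m: float, closing_speed_mps: float) -> float:
    """Seconds until contact if range and closing speed stay constant.

    closing_speed_mps > 0 means the gap is shrinking; otherwise no collision
    is predicted and math.inf is returned.
    """
    if closing_speed_mps <= 0.0:
        return math.inf
    return range_m / closing_speed_mps


def needs_braking(range_m: float, closing_speed_mps: float, threshold_s: float = 2.0) -> bool:
    """Hypothetical decision rule: brake when TTC drops below threshold_s."""
    return time_to_collision(range_m, closing_speed_mps) < threshold_s


if __name__ == "__main__":
    # Pedestrian 10 m ahead, gap closing at 6 m/s: TTC is about 1.7 s.
    print(needs_braking(10.0, 6.0))
    # Vehicle 30 m ahead and pulling away: no predicted collision.
    print(needs_braking(30.0, -2.0))
```

Because TTC depends on closing speed, it rewards sensors that measure velocity directly (such as radar) and low end-to-end latency: every 100 ms of processing delay consumes part of the available time budget.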

Reliable obstacle detection is fundamental to the overall safety and reliability of autonomous systems. Improving detection accuracy paves the way for adopting autonomous technology in sectors ranging from manufacturing to transportation.

Integrating multiple sensor modalities, such as cameras, lidar, and radar, provides a more comprehensive understanding of the environment and can reduce false positives, since an artifact that fools one sensor (a shadow for a camera, a multipath return for radar) is unlikely to fool another in the same way. Robust sensor fusion and data integration algorithms are needed to combine the strengths of the different sensor types.
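A minimal sketch of cross-modality confirmation: keep a detection only if at least two sensor types report an object near the same position. It assumes all positions are already transformed into one vehicle frame in metres; the 1 m gate, the two-modality rule, and the function name are illustrative assumptions.

```python
import math

Point = tuple[float, float]


def confirm_detections(
    detections: dict[str, list[Point]], min_modalities: int = 2, gate_m: float = 1.0
) -> list[Point]:
    """Keep detections that at least min_modalities sensor types agree on.

    detections maps a modality name (e.g. "camera") to object positions.
    A detection's support counts every modality, including its own, that
    reports an object within gate_m of it.
    """
    confirmed: list[Point] = []
    for points in detections.values():
        for p in points:
            support = sum(
                1 for others in detections.values()
                if any(math.dist(p, q) <= gate_m for q in others)
            )
            already_kept = any(math.dist(p, c) <= gate_m for c in confirmed)
            if support >= min_modalities and not already_kept:
                confirmed.append(p)
    return confirmed


if __name__ == "__main__":
    scene = {
        "camera": [(10.0, 0.0)],
        "lidar": [(10.3, 0.2), (25.0, 5.0)],  # (25, 5) is seen by lidar only
        "radar": [(10.1, -0.1)],
    }
    print(confirm_detections(scene))  # the lidar-only detection is dropped
```

The rule trades false positives for false negatives: an object only one sensor can see (for example, a small item below the radar's field of view) is also rejected, so a real system would typically weight this vote by each sensor's known coverage and reliability.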

Implemented successfully, these techniques will make autonomous systems more reliable and safer across a wide range of applications.

Obstacle detection algorithms must also be robust and adaptable if autonomous systems are to navigate diverse environments safely and efficiently. That adaptability includes handling different lighting conditions, weather patterns, and levels of environmental clutter.