Object Detection Fundamentals

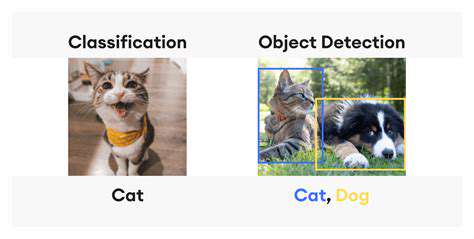

Object detection is a computer vision technique that locates and classifies objects within an image or video. It's a crucial component in various applications, from autonomous vehicles to medical image analysis. This process involves identifying the presence of specific objects, like cars, pedestrians, or even tumors, and pinpointing their precise location within the visual data.

These fundamentals (how detectors are built, trained, and evaluated) are what let you judge the strengths and limitations of a given object detection model and decide where it can reasonably be applied.

Deep Learning Architectures for Object Detection

Various deep learning architectures are employed in object detection, each with its strengths and weaknesses. Convolutional Neural Networks (CNNs) are a cornerstone of modern object detection systems, enabling the extraction of hierarchical features from images. These features are crucial for distinguishing different object classes.
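To make "extracting features" concrete, the sketch below applies a single hand-written 3x3 filter to a toy image in plain Python. It is only an illustration: the `convolve2d` helper, the `VERTICAL_EDGE` kernel, and the image values are assumptions made for this example. A real CNN learns many such kernels from data and stacks them in layers.

```python
from typing import List

Matrix = List[List[float]]


def convolve2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid-mode 2D cross-correlation, the operation CNN layers call convolution."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    output = []
    for row in range(out_h):
        out_row = []
        for col in range(out_w):
            total = 0.0
            # Multiply the kernel with the image patch under it and sum.
            for i in range(k_h):
                for j in range(k_w):
                    total += image[row + i][col + j] * kernel[i][j]
            out_row.append(total)
        output.append(out_row)
    return output


# Hand-written vertical-edge kernel (hypothetical; trained networks learn theirs).
VERTICAL_EDGE = [
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
]

# Toy 4x5 image: dark (0) on the left, bright (1) on the right.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(4)]

feature_map = convolve2d(image, VERTICAL_EDGE)
# feature_map == [[3.0, 3.0, 0.0], [3.0, 3.0, 0.0]]
```

The filter responds strongly (3.0) where dark pixels meet bright ones and not at all (0.0) in the uniform region, which is the sense in which a convolutional filter detects a feature.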

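The Region-based Convolutional Neural Network (R-CNN) paved the way for significant advances in this field by applying a CNN to candidate regions of an image. Faster R-CNN sped this up by generating the candidate regions with a network that shares features with the detector, while YOLO (You Only Look Once) predicts boxes and classes in a single pass over the image. Together, these developments improved speed and accuracy enough to make real-time applications practical.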

Data Preparation and Annotation

High-quality datasets are critical for training robust object detection models. Properly annotated data, where each object is marked with a tight bounding box and the correct class label, plays a pivotal role in model performance. Manual annotation is time-consuming and expensive, but careful human labeling, ideally with review and consistency checks, remains the usual way to obtain accurate and reliable ground truth.

Object Classification Techniques

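Object classification is a fundamental aspect of object detection. It involves assigning a category to each detected object, such as identifying a vehicle as a car, truck, or motorcycle. In deep learning detectors this is usually done by the network itself, which outputs a score per class. Classical machine learning algorithms, including support vector machines (SVMs) and decision trees, can also be employed for this task; the original R-CNN, for example, classified CNN features with SVMs.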
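However the per-class scores are produced, the final step usually reduces to picking the highest-scoring category. The following is a minimal sketch of that step, assuming a model has already produced raw scores for one detected object. The `classify` function, the class names, and the score values are invented for illustration.

```python
import math
from typing import Dict, Tuple


def classify(raw_scores: Dict[str, float]) -> Tuple[str, float]:
    """Turn raw scores into probabilities with softmax and return the top class."""
    highest = max(raw_scores.values())
    # Subtracting the maximum keeps exp() from overflowing on large scores.
    exps = {label: math.exp(score - highest) for label, score in raw_scores.items()}
    total = sum(exps.values())
    probabilities = {label: value / total for label, value in exps.items()}
    best_label = max(probabilities, key=probabilities.get)
    return best_label, probabilities[best_label]


# Hypothetical scores for one detected vehicle.
label, confidence = classify({"car": 2.0, "truck": 1.0, "motorcycle": 0.1})
```

Here `label` is `"car"`, and `confidence` is its softmax probability, which downstream logic can compare against a threshold before acting on the result.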

Accurate classification is essential for downstream tasks, enabling systems to make informed decisions based on identified objects. The accuracy of classification directly impacts the overall reliability of the object detection process.

Performance Metrics in Object Detection

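Evaluating the performance of an object detection model is crucial. Common metrics include precision, recall, and F1-score. Precision measures the proportion of correctly identified objects among all detected objects, while recall measures the proportion of correctly identified objects among all actual objects present. Because a detection includes a location, it is usually counted as correct only if its predicted box overlaps a ground-truth box of the same class by at least a chosen intersection over union (IoU) threshold, often 0.5. Benchmarks commonly summarize these results as mean average precision (mAP).

Understanding these metrics is essential for comparing different object detection models and selecting the most suitable one for a particular application. The F1-score is the harmonic mean of precision and recall, so it is high only when both are high, which indicates a model that finds most objects without producing many false detections.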
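The sketch below shows one way these metrics can be computed for a single image. It rests on several assumptions that are not in the text above: boxes are `(x_min, y_min, x_max, y_max)` tuples, a prediction counts as correct when its intersection over union (IoU) with an unmatched ground-truth box is at least 0.5, matching is greedy, and class labels are ignored for brevity. The function names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(
    predictions: List[Box],
    ground_truth: List[Box],
    iou_threshold: float = 0.5,
) -> Tuple[float, float, float]:
    """Greedily match each prediction to at most one unmatched ground-truth box."""
    matched = set()
    true_positives = 0
    for pred in predictions:
        best_idx, best_iou = -1, 0.0
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue
            score = iou(pred, gt)
            if score > best_iou:
                best_idx, best_iou = idx, score
        if best_idx >= 0 and best_iou >= iou_threshold:
            matched.add(best_idx)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom > 0 else 0.0
    return precision, recall, f1


# Two predictions, three real objects, one correct match.
preds = [(0, 0, 10, 10), (50, 50, 60, 60)]
truth = [(0, 0, 10, 10), (20, 20, 30, 30), (100, 100, 110, 110)]
precision, recall, f1 = precision_recall_f1(preds, truth)
```

In this toy case one of two predictions is correct (precision 0.5) and one of three objects is found (recall of one third), giving an F1-score of 0.4. A full evaluation would also match on class label and usually process predictions in order of confidence.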

Applications of Object Detection

Object detection has a broad range of applications, impacting various industries. From self-driving cars to security systems, object detection is changing how we interact with technology. In healthcare, it aids in medical image analysis, assisting in the identification of anomalies and diseases.

More broadly, object detection lets systems respond to what is actually around them rather than to fixed assumptions about their surroundings. The range of applications continues to grow, driving innovation across diverse fields.

Challenges and Future Directions

Despite advancements, challenges remain in object detection. Issues such as handling diverse lighting conditions, complex backgrounds, and occlusions continue to impact accuracy. Future research focuses on developing more robust models that can effectively address these challenges.

Addressing these challenges is crucial for widespread adoption in real-world applications. Advancements in deep learning, along with improvements in data annotation techniques, hold the key to further progress in this exciting field.

Scene Understanding: Contextualizing the Driving Environment

Scene Understanding: Extracting Relevant Information

Scene understanding in computer vision for autonomous vehicles is a crucial aspect of perception. It involves processing visual data from cameras to extract meaningful information about the surrounding environment, including objects, their locations, and their relationships. This process goes beyond simply identifying objects like cars or pedestrians; it aims to comprehend the entire scene's context, such as the presence of traffic lights, road markings, and other vehicles' intentions. This contextual understanding allows the vehicle to make informed decisions, enabling safe and efficient navigation.

A key component of scene understanding is the ability to segment the scene into different regions of interest. This segmentation process isolates objects and background elements, enabling accurate object detection and classification. Sophisticated algorithms are employed to differentiate between static and dynamic elements, such as the road and moving vehicles, enabling the system to track and predict the behavior of objects in the scene. Accurate and real-time scene understanding is essential for autonomous vehicles to react appropriately to changing conditions and avoid potential hazards.

Contextualizing the Driving Environment for Decision Making

Beyond simply detecting objects, a robust scene understanding system needs to contextualize the driving environment. This involves interpreting the relationships between objects in the scene to understand their interactions and possible future actions. For example, recognizing that a car ahead is stopped at a red light while other vehicles are approaching it from behind allows the system to anticipate queuing traffic and estimate the risk of a rear-end collision. This contextual information is paramount in enabling safe and intelligent decision-making by the autonomous vehicle.
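One simple quantity that captures a relationship between two vehicles is time to collision: the gap between them divided by the speed at which that gap is closing. The sketch below is a deliberately simplified illustration, assuming both vehicles travel in the same lane at constant speed. The function name, parameters, and example numbers are hypothetical.

```python
import math


def time_to_collision(gap_m: float, ego_speed_mps: float, lead_speed_mps: float) -> float:
    """Seconds until the ego vehicle reaches the lead vehicle at constant speeds.

    Returns math.inf when the gap is not closing.
    """
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0:
        return math.inf
    return gap_m / closing_speed


# Ego vehicle at 15 m/s approaching a car stopped 30 m ahead at a red light.
ttc = time_to_collision(gap_m=30.0, ego_speed_mps=15.0, lead_speed_mps=0.0)
# ttc == 2.0 seconds
```

A planner could compare this value against a safety margin to decide when to start braking. A production system would also account for acceleration, sensor uncertainty, and lateral motion.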

Further contextualization includes predicting the behavior of other drivers and pedestrians. By analyzing their past actions and current context, the system can infer their intentions, such as whether a pedestrian is about to cross the street or if another vehicle is attempting to change lanes. This anticipatory understanding of the environment enables the autonomous vehicle to proactively adjust its trajectory and avoid potential conflicts, making the driving experience smoother and safer.
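The simplest form of behavior prediction is to assume that an object keeps moving as it has been. The sketch below extrapolates a tracked position with a constant-velocity model. It is a baseline for illustration only, and the `predict_position` function and its track format are assumptions of this example rather than a description of any particular system.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) position in meters


def predict_position(track: List[Point], dt: float, horizon: float) -> Point:
    """Extrapolate the most recent velocity over `horizon` seconds.

    `track` holds positions sampled every `dt` seconds, oldest first.
    """
    if len(track) < 2:
        raise ValueError("need at least two observations to estimate velocity")
    (x_prev, y_prev), (x_last, y_last) = track[-2], track[-1]
    vx = (x_last - x_prev) / dt
    vy = (y_last - y_prev) / dt
    return (x_last + vx * horizon, y_last + vy * horizon)


# A pedestrian observed twice, 0.5 s apart, moving toward the road.
future = predict_position([(0.0, 0.0), (1.0, 0.5)], dt=0.5, horizon=1.0)
# future == (3.0, 1.5)
```

Constant velocity ignores intent entirely, so it cannot tell a pedestrian who is about to stop at the curb from one who will cross. That limitation is why the contextual cues described above matter.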

Environmental factors like weather conditions (rain, snow, fog) and lighting conditions must also be taken into account, because they degrade what the cameras can see. By incorporating this environmental context, the system can adapt its behavior accordingly, for example by treating detections more cautiously in low visibility, which ultimately improves the overall safety and reliability of the autonomous vehicle.

Scene understanding, therefore, goes beyond basic object recognition and aims to build a comprehensive picture of what the vehicle's sensors are seeing. Using that picture to make timely, well-informed decisions and to anticipate potential hazards is what makes autonomous driving safer and more reliable.

Real-time Processing and Robustness: Ensuring Safe and Efficient Operation

Real-time Data Handling

Real-time processing is crucial for applications requiring immediate responses to incoming data streams. This involves the ability to analyze and act upon data as it arrives, without significant latency. Efficient algorithms and optimized infrastructure are paramount to achieve this. Delays mean the system acts on stale data, which can lead to late reactions, inaccurate results, and ultimately system instability, highlighting the importance of real-time data handling in modern applications.
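A common first step toward real-time behavior is to measure each processing step against an explicit latency budget. The sketch below illustrates the idea. The `run_with_deadline` helper and the 33 ms budget (roughly one frame at 30 frames per second) are assumptions chosen for this example.

```python
import time
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def run_with_deadline(step: Callable[[], T], budget_s: float) -> Tuple[T, float, bool]:
    """Run one processing step and report whether it finished within budget.

    The step is not interrupted; this only measures and flags overruns.
    """
    start = time.perf_counter()
    result = step()
    elapsed = time.perf_counter() - start
    return result, elapsed, elapsed <= budget_s


def process_frame() -> str:
    """Stand-in for real per-frame work such as running a detector."""
    return "detections"


result, elapsed, on_time = run_with_deadline(process_frame, budget_s=0.033)
if not on_time:
    print(f"frame took {elapsed * 1000:.1f} ms, over budget")
```

Logging overruns like this makes latency regressions visible early. Enforcing a hard deadline, as opposed to measuring one, requires support from the scheduler or the runtime and is beyond this sketch.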

Robustness Against Data Errors

Systems must be designed with robustness in mind, ensuring they can withstand various types of data errors and anomalies. This includes handling missing values, outliers, and inconsistencies in the input data. Robust error handling mechanisms are essential to maintain the integrity and reliability of the results, preventing unexpected behavior and ensuring the system's continued operation in unpredictable environments. These mechanisms must be capable of adapting to fluctuations in data quality.
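As an illustration of handling missing values and outliers, the sketch below cleans a list of numeric readings using the median and the median absolute deviation (MAD), both of which are less sensitive to extreme values than the mean and standard deviation. The `clean_readings` function, the threshold of three deviations, and the sample data are assumptions made for this example.

```python
import math
import statistics
from typing import List, Optional


def clean_readings(
    readings: List[Optional[float]],
    max_deviations: float = 3.0,
) -> List[float]:
    """Drop missing values (None or NaN) and readings far from the median."""
    present = [r for r in readings if r is not None and not math.isnan(r)]
    if len(present) < 3:
        return present  # too few points to judge what counts as an outlier
    median = statistics.median(present)
    mad = statistics.median([abs(r - median) for r in present])
    if mad == 0:
        return present  # no spread estimate available, keep everything
    return [r for r in present if abs(r - median) <= max_deviations * mad]


# Hypothetical distance readings in meters with a gap, a NaN, and a spike.
raw = [10.0, 10.2, None, 9.9, float("nan"), 250.0, 10.1]
cleaned = clean_readings(raw)
# cleaned == [10.0, 10.2, 9.9, 10.1]
```

Whether to drop, clamp, or interpolate a bad reading depends on the application. Dropping is shown here only because it is the simplest policy to read.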

Fault Tolerance and Failover Mechanisms

In mission-critical systems, fault tolerance is essential: the system must be able to continue operating even if components fail. Implementing failover mechanisms allows for a seamless transition to backup systems or resources in case of component failures. This proactive approach minimizes downtime, which is crucial in real-time systems where continuous operation is paramount.
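At its simplest, failover means trying a backup when the primary source fails. The sketch below shows that pattern with an ordered list of providers. The `call_with_failover` helper and the camera functions are hypothetical, and real systems typically add health checks, timeouts, and narrower exception handling.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def call_with_failover(providers: Sequence[Callable[[], T]]) -> T:
    """Try each provider in order and return the first successful result."""
    errors = []
    for provider in providers:
        try:
            return provider()
        except Exception as exc:  # a real system would catch narrower error types
            errors.append(exc)
    raise RuntimeError(f"all {len(providers)} providers failed: {errors}")


def primary_camera() -> str:
    """Hypothetical primary source that is currently failing."""
    raise ConnectionError("primary camera offline")


def backup_camera() -> str:
    """Hypothetical backup source."""
    return "frame from backup camera"


frame = call_with_failover([primary_camera, backup_camera])
# frame == "frame from backup camera"
```

Collecting the errors instead of discarding them keeps the failure of the primary visible, so that a silent switch to the backup does not hide a fault that still needs repair.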

Data Validation and Filtering

Data validation and filtering are critical steps to ensure the quality and accuracy of the processed data. Validating data types, ranges, and formats helps prevent erroneous calculations and outputs. Filtering out irrelevant or noisy data improves the efficiency and effectiveness of the analysis process. These steps are not just about data hygiene, but about ensuring the reliability of the entire system.
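The checks described above (types, ranges, formats, then filtering) can be sketched as a single predicate applied to each record. The record layout below, a dictionary with `label`, `confidence`, and `box` keys, and the 0.5 confidence cutoff are assumptions made for this example, not a standard format.

```python
from typing import Dict, List


def is_valid_detection(det: Dict, min_confidence: float = 0.5) -> bool:
    """Check the type, range, and format of one detection record."""
    confidence = det.get("confidence")
    box = det.get("box")
    if not isinstance(det.get("label"), str):
        return False  # wrong or missing type
    if not isinstance(confidence, (int, float)) or not 0.0 <= confidence <= 1.0:
        return False  # confidence outside the valid range
    if not isinstance(box, (list, tuple)) or len(box) != 4:
        return False  # wrong format
    if not all(isinstance(v, (int, float)) for v in box):
        return False
    x_min, y_min, x_max, y_max = box
    if x_min >= x_max or y_min >= y_max:
        return False  # degenerate or inverted box
    return confidence >= min_confidence  # filter out low-confidence noise


def filter_detections(detections: List[Dict]) -> List[Dict]:
    """Keep only the records that pass validation."""
    return [d for d in detections if is_valid_detection(d)]


detections = [
    {"label": "car", "confidence": 0.9, "box": (10, 20, 110, 80)},
    {"label": "car", "confidence": 1.7, "box": (0, 0, 5, 5)},         # bad range
    {"label": "pedestrian", "confidence": 0.3, "box": (0, 0, 5, 5)},  # too uncertain
    {"label": "truck", "confidence": 0.8, "box": (50, 50, 40, 90)},   # inverted box
    {"label": "truck", "confidence": 0.8},                            # missing box
]
valid = filter_detections(detections)
# Only the first record survives.
```

Rejecting malformed records at the boundary means later stages can assume well-formed input, which keeps their logic simpler.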

Efficient Resource Management

Efficient resource management is vital for sustained real-time performance. Optimizing CPU usage, memory allocation, and network bandwidth is critical to maintaining responsiveness and preventing bottlenecks. Strategies for resource management, including load balancing and dynamic scaling, are necessary to accommodate fluctuating data volumes and system demands.
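One common way to keep memory use bounded when data arrives faster than it can be processed is a fixed-size buffer that discards the oldest items. This is offered as an illustration of the general idea, not as something the text above prescribes. The `LatestFramesBuffer` class is hypothetical.

```python
from collections import deque


class LatestFramesBuffer:
    """Fixed-size buffer that discards the oldest frame when full."""

    def __init__(self, capacity: int) -> None:
        self._frames = deque(maxlen=capacity)
        self.dropped = 0  # count of frames evicted before being processed

    def push(self, frame) -> None:
        if len(self._frames) == self._frames.maxlen:
            self.dropped += 1  # deque evicts the oldest item on append
        self._frames.append(frame)

    def pop_oldest(self):
        """Return the oldest buffered frame, or None if the buffer is empty."""
        return self._frames.popleft() if self._frames else None


buffer = LatestFramesBuffer(capacity=2)
for frame_id in (1, 2, 3):
    buffer.push(frame_id)
# Frame 1 was evicted: buffer.dropped == 1 and the buffer now holds frames 2 and 3.
```

Dropping stale frames trades completeness for freshness, which suits perception workloads where the newest frame matters most. The `dropped` counter gives a simple signal that the consumer is falling behind.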

Security Considerations

Security is paramount in real-time processing systems, especially when dealing with sensitive data. Robust security measures are needed to protect data from unauthorized access, modification, or disclosure. Implementing encryption, access controls, and intrusion detection systems safeguards the integrity of the system and the data it handles, maintaining the confidentiality and privacy of the data.
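As a small illustration of protecting data against unauthorized modification, the sketch below signs messages with an HMAC from Python's standard library and verifies them on receipt. It covers integrity only, not confidentiality (that requires encryption). The key and message values are placeholders, and key distribution is left out.

```python
import hashlib
import hmac


def sign(message: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message: bytes, key: bytes, signature: str) -> bool:
    """Check the tag using a constant-time comparison."""
    return hmac.compare_digest(sign(message, key), signature)


# Placeholder key for illustration; a real key would come from secure storage.
key = b"example-shared-secret"
message = b"command=brake"
tag = sign(message, key)

untouched_ok = verify(message, key, tag)                # True
tampered_ok = verify(b"command=accelerate", key, tag)   # False
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not reveal how much of a forged tag was correct.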

Scalability and Adaptability

Real-time systems must be scalable to handle increasing data volumes and user demands. The ability to adapt to changing requirements and expand processing capacity is critical for long-term viability. Flexible architectures and modular designs make this possible, because individual components can be replaced or scaled without redesigning the whole system as needs and technologies evolve.