The Genesis of Sight

Human vision begins when light enters our eyes, triggering a biological process that transforms photons into neural signals. Unlike digital cameras that capture pixels, our retinas contain specialized cells that dynamically respond to changing light conditions. The cornea's curvature and the lens's flexibility work in tandem to focus images with remarkable precision across different distances.

What makes biological vision extraordinary is its adaptive nature: our pupils begin to dilate or constrict within a fraction of a second of a change in brightness, while retinal cells continuously recalibrate their sensitivity. This evolutionary adaptation lets us see detail in both bright sunlight and moonlit environments.
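A quick calculation shows why the pupil alone cannot account for this range. The sketch below assumes the pupil diameter varies between roughly 2 mm and 8 mm; these are typical textbook values, used here only for illustration. Admitted light scales with pupil area, so that range gives only a 16-fold change, about 1.2 log units. The rest of the eye's adaptation has to come from the retina itself.

```python
import math

# Assumed typical limits of pupil diameter, in millimetres (illustrative only).
PUPIL_MIN_MM = 2.0
PUPIL_MAX_MM = 8.0

def log_units(ratio: float) -> float:
    """Express a linear intensity ratio in log units (powers of ten)."""
    return math.log10(ratio)

# Light admitted scales with pupil area, i.e. with diameter squared.
area_ratio = (PUPIL_MAX_MM / PUPIL_MIN_MM) ** 2
pupil_range = log_units(area_ratio)

print(f"area ratio: {area_ratio:.0f}x, i.e. {pupil_range:.1f} log units")
```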

The Role of Light in Perception

Visible light occupies only a narrow band of the electromagnetic spectrum, wavelengths of roughly 380 to 750 nanometers, yet it carries all the visual information we perceive. Different wavelengths interact differently with matter: some penetrate deeper into tissue while others scatter at surfaces. This wavelength-dependent absorption and scattering explains why some materials appear transparent while others remain opaque.

The interplay between absorption and reflection determines color perception, with cone cells responding preferentially to specific wavelength ranges. Modern cameras mimic this principle through Bayer filter arrays, though they still can't match the human eye's dynamic range.
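Here is a minimal sketch of the Bayer idea, assuming the common RGGB layout. Each photosite sits under a single red, green, or blue filter, so the sensor records one color sample per pixel. Full color must be interpolated afterwards (demosaicing, not shown). The helper names `bayer_channel` and `mosaic` are illustrative and do not come from any camera library.

```python
def bayer_channel(row: int, col: int) -> str:
    """Color filter over photosite (row, col) in an RGGB Bayer pattern."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"

def mosaic(rgb_image):
    """Keep only the one channel each photosite can see.

    rgb_image: list of rows, each a list of (r, g, b) tuples.
    Returns a list of rows of single intensity values.
    """
    index = {"R": 0, "G": 1, "B": 2}
    return [[pixel[index[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
            for r, row in enumerate(rgb_image)]

# Count filter colors on a 4x4 sensor.
counts = {"R": 0, "G": 0, "B": 0}
for r in range(4):
    for c in range(4):
        counts[bayer_channel(r, c)] += 1
print(counts)  # {'R': 4, 'G': 8, 'B': 4}
```

Green gets twice as many photosites as red or blue. This loosely echoes the eye's greater sensitivity to the green part of the spectrum.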

Retinal Processing: Nature's Image Sensor

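Unlike digital sensors, which treat every pixel identically, the retina performs sophisticated preprocessing. Lateral inhibition from horizontal and amacrine cells gives ganglion cells center-surround receptive fields that enhance edges, while specialized neurons detect motion direction. Well over 100 million photoreceptors converge onto roughly one million ganglion cells per eye, so this circuitry sharply reduces bandwidth before signals reach the brain.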
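Lateral inhibition can be sketched as a toy one-dimensional filter. Each output is its own input minus a fraction of its neighbours' average. The weight of 0.5 is an arbitrary illustrative choice, not a physiological value. Applied to a step in brightness, the response dips just before the edge and overshoots just after it, much like the Mach band illusion. Uniform regions, meanwhile, are attenuated.

```python
def center_surround(signal, surround_weight=0.5):
    """Toy 1-D center-surround filter.

    Each output is the center sample minus `surround_weight` times the mean
    of its two neighbours; boundary samples are repeated at the ends.
    """
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[max(i - 1, 0)]
        right = signal[min(i + 1, n - 1)]
        out.append(signal[i] - surround_weight * (left + right) / 2)
    return out

# A dark region (1.0) next to a bright region (3.0).
step = [1.0] * 5 + [3.0] * 5
response = center_surround(step)
print(response)  # [0.5, 0.5, 0.5, 0.5, 0.0, 2.0, 1.5, 1.5, 1.5, 1.5]
```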

The fovea's dense concentration of cones provides high-acuity central vision, while rods, which dominate the periphery, excel at low-light detection. Some camera systems follow a similar split, pairing a narrow-field, high-resolution camera with wide-angle cameras for peripheral coverage.

Neural Pathways: From Eye to Brain

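Optic nerves don't simply transmit pixels: they carry preprocessed feature maps. Roughly half of the fibers, those from the nasal half of each retina, cross at the optic chiasm. As a result, each hemisphere receives input from both eyes about the opposite half of the visual field, an arrangement that supports stereoscopic vision. The lateral geniculate nucleus in the thalamus acts as a relay and processing hub before visual data reaches the primary visual cortex in the occipital lobe.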
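The crossing rule fits in a few lines. This toy model uses illustrative function names and encodes two textbook facts:

- Each nasal hemiretina images the temporal half of the visual field.
- Only nasal fibers cross at the chiasm.

Running it shows that every eye/hemiretina combination ends up in the hemisphere opposite the field it sees.

```python
from itertools import product

OPPOSITE = {"left": "right", "right": "left"}

def field_seen(eye: str, hemiretina: str) -> str:
    """Visual-field half imaged by a hemiretina: the nasal half sees the
    temporal field (same side as the eye), the temporal half the nasal field."""
    return eye if hemiretina == "nasal" else OPPOSITE[eye]

def hemisphere(eye: str, hemiretina: str) -> str:
    """Nasal fibers cross at the optic chiasm; temporal fibers do not."""
    return OPPOSITE[eye] if hemiretina == "nasal" else eye

for eye, half in product(("left", "right"), ("nasal", "temporal")):
    print(f"{eye} eye, {half} retina: sees {field_seen(eye, half)} field, "
          f"projects to {hemisphere(eye, half)} hemisphere")
```

Each hemisphere therefore receives input from both eyes, which is what binocular comparison requires.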

Despite neural delays, vision feels instantaneous. This is partly because the brain predicts motion trajectories and fills in gaps between successive retinal inputs, a capability autonomous systems still struggle to replicate.
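Engineered systems usually approximate this kind of prediction explicitly. The sketch below uses a simple constant-velocity extrapolation: it projects the latest observed position forward by a known processing delay. It is a stand-in engineering technique, not a model of how the brain does it. The sample values and the `extrapolate` helper are hypothetical.

```python
def extrapolate(positions, dt, latency):
    """Predict where a target is now, given delayed samples.

    positions: recent positions sampled every `dt` seconds, oldest first
    latency:   delay in seconds between the last sample and its use
    Velocity is estimated from the last two samples (constant-velocity model).
    """
    if len(positions) < 2:
        raise ValueError("need at least two samples to estimate velocity")
    velocity = (positions[-1] - positions[-2]) / dt
    return positions[-1] + velocity * latency

# Hypothetical target moving at 2 m/s, sampled at 10 Hz, used with 100 ms lag.
samples = [0.0, 0.2, 0.4, 0.6]
print(extrapolate(samples, dt=0.1, latency=0.1))
```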

Machine Vision vs Biological Sight

While CMOS sensors now exceed 100 megapixels, they lack the eye's adaptive capabilities. Human vision dynamically adjusts for:

- Varying illumination (a range of roughly 14 log units across light and dark adaptation)

- Continuous focus adjustment

- Motion prediction and compensation

- Contextual object recognition

Current research in neuromorphic cameras attempts to bridge this gap through event-based sensing and in-sensor processing.
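Event-based sensing can be illustrated with the idealized pixel model commonly used to describe DVS-style cameras. Instead of reporting frames, a pixel emits an event whenever its log intensity has changed by a fixed contrast threshold since its last event. The sketch below assumes a threshold of 0.25, an arbitrary illustrative value. It ignores noise, refractory periods, and per-pixel threshold mismatch.

```python
import math

def dvs_events(intensities, timestamps, threshold=0.25):
    """Idealized event-camera pixel.

    Emits (timestamp, polarity) each time log intensity moves `threshold`
    away from the reference level set at the previous event: +1 for
    brighter, -1 for darker. Intensities must be positive.
    """
    events = []
    reference = math.log(intensities[0])
    for t, value in zip(timestamps[1:], intensities[1:]):
        level = math.log(value)
        # A large change crosses the threshold several times -> several events.
        while abs(level - reference) >= threshold:
            polarity = 1 if level > reference else -1
            reference += polarity * threshold
            events.append((t, polarity))
    return events

# A pixel that stays dark, brightens 2x, holds, then returns to the start level.
print(dvs_events([1.0, 1.0, 2.0, 2.0, 1.0], [0, 1, 2, 3, 4]))
```

A static pixel produces no events at all. This is where the bandwidth and power savings of event cameras come from.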

Camera Integration with Other Sensors for Enhanced Perception

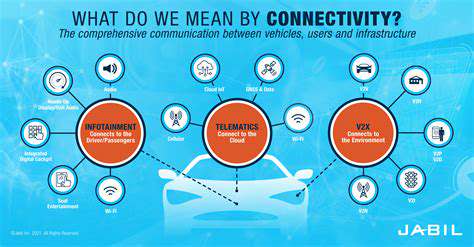

Multi-Sensor Fusion Techniques

Modern autonomous systems employ sensor fusion algorithms that combine data from multiple sources:

| Sensor Type | Strengths | Limitations |
|---|---|---|
| Stereo Cameras | Color information, texture detail | Depth accuracy degrades with distance |
| LiDAR | Precise distance measurement | Sparse point clouds, little texture detail |
| Radar | Direct (Doppler) velocity measurement, robust in rain and fog | Low spatial resolution |
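The stereo camera's depth limitation follows from triangulation geometry. Take a rectified pair with focal length f (in pixels) and baseline B. Depth is Z = f * B / d for disparity d. A small disparity error dd produces a depth error of roughly Z^2 / (f * B) * dd, so uncertainty grows with the square of distance. The rig parameters below are hypothetical.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_err_px: float = 0.25) -> float:
    """First-order depth uncertainty: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px

# Hypothetical rig: 1000 px focal length, 0.3 m baseline, 0.25 px matching error.
for z in (5.0, 20.0, 50.0):
    print(f"Z = {z:4.0f} m -> depth error ~ {depth_error(1000.0, 0.3, z):.2f} m")
```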

The Kalman filter, together with nonlinear variants such as the extended and unscented Kalman filters, remains the foundation of real-time sensor fusion. Newer deep learning-based methods show promise for handling nonlinear relationships between sensors.
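To make the Kalman idea concrete, here is a minimal one-dimensional sketch. It fuses a noisy camera range estimate with a more precise radar reading of the same object. It assumes a linear constant-velocity model and made-up noise variances. Production systems track multidimensional states and use extended or unscented variants for nonlinear sensor models.

```python
from dataclasses import dataclass

@dataclass
class Estimate:
    mean: float  # estimated range to the object, in metres
    var: float   # variance of that estimate

def predict(est: Estimate, velocity: float, dt: float, process_var: float) -> Estimate:
    """Constant-velocity prediction: shift the mean, inflate the variance."""
    return Estimate(est.mean + velocity * dt, est.var + process_var)

def update(est: Estimate, measurement: float, meas_var: float) -> Estimate:
    """Scalar Kalman measurement update for a directly observed state."""
    gain = est.var / (est.var + meas_var)
    return Estimate(est.mean + gain * (measurement - est.mean), (1 - gain) * est.var)

# Hypothetical readings and variances for one object at a single time step.
est = Estimate(mean=20.0, var=4.0)
est = predict(est, velocity=-1.0, dt=0.1, process_var=0.05)
est = update(est, measurement=19.5, meas_var=1.0)  # camera range: noisier
est = update(est, measurement=19.8, meas_var=0.1)  # radar range: more precise
print(est)
```

Each update weights a measurement by how its variance compares with the current estimate's. The precise radar reading therefore pulls the estimate harder than the camera reading, and the fused variance ends up below either sensor's alone.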

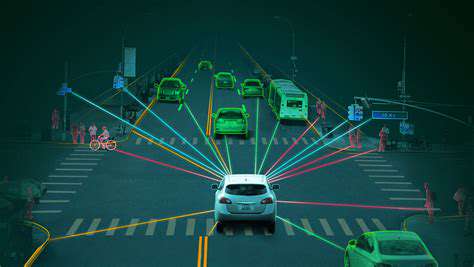

Case Study: Autonomous Vehicle Perception

Tesla's vision-only approach demonstrates how advanced algorithms can compensate for minimal sensor hardware. Its system has used:

- 8 surround cameras (1280x960 resolution)

- 12 ultrasonic sensors (dropped from new vehicles starting in late 2022)

- Neural networks processing 2500 frames/second

Meanwhile, Waymo combines LiDAR with cameras to create high-definition 3D maps, achieving centimeter-level localization accuracy in urban environments.

Thermal Imaging Applications

FLIR systems demonstrate how thermal cameras enhance perception in challenging conditions:

- Pedestrian detection at night (98% accuracy)

- Overheated component identification

- Through-smoke visualization

Recent advances in multispectral imaging combine visible and infrared spectra for all-weather operation.
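Wien's displacement law, lambda_max = b / T, shows why thermal cameras suit pedestrian detection. A body near 310 K emits most strongly around 9 to 10 micrometres. That falls inside the roughly 8 to 14 micrometre long-wave infrared band where many thermal cameras operate, whereas sunlight peaks in the visible range. The sketch treats each source as an ideal blackbody, which is a simplification, and the temperatures are illustrative.

```python
# Wien's displacement constant (CODATA value), in metre-kelvins.
WIEN_B_M_K = 2.897771955e-3

def peak_wavelength_um(temp_k: float) -> float:
    """Wavelength of peak blackbody emission, in micrometres."""
    return WIEN_B_M_K / temp_k * 1e6

# Illustrative temperatures; real surfaces are not perfect blackbodies.
sources = {
    "human body (~310 K)": 310.0,
    "overheated component (~400 K)": 400.0,
    "sun's surface (~5772 K)": 5772.0,
}
for name, temp in sources.items():
    print(f"{name}: peak near {peak_wavelength_um(temp):.1f} um")
```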

Cutting-Edge Developments in Visual Perception

Neuromorphic Engineering

Inspired by biological vision, event cameras like the Dynamic Vision Sensor (DVS) offer:

- Microsecond latency (vs. 33 ms per frame for a standard 30 fps camera)

- High dynamic range (140 dB vs. 60 dB)

- Low power consumption (10 mW vs. 1.5 W)

These characteristics make them ideal for high-speed applications like drone navigation and industrial inspection.
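The dynamic-range figures above are easier to compare with the eye's once both are expressed in log units. The sketch assumes the usual image-sensor convention that dynamic range in decibels is 20 * log10(max / min). Under that convention, every 20 dB is one decade of intensity.

```python
def db_to_log_units(db: float) -> float:
    """Dynamic range in dB (20*log10 convention) -> decades of intensity."""
    return db / 20.0

def log_units_to_db(log_units: float) -> float:
    """Decades of intensity -> dynamic range in dB (20*log10 convention)."""
    return 20.0 * log_units

specs = {"event camera (DVS)": 140.0, "standard camera": 60.0}
for name, db in specs.items():
    decades = db_to_log_units(db)
    print(f"{name}: {db:.0f} dB = {decades:.0f} log units ({10 ** decades:.0e}:1)")

# The eye's ~14 log units span light and dark adaptation, not a single scene.
print(f"human vision, all adaptation states: {log_units_to_db(14):.0f} dB")
```

By this measure the eye's roughly 14 log units correspond to about 280 dB. That figure applies only across adaptation states; within a single scene the eye's range is much narrower.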

Quantum Imaging Techniques

Emerging quantum technologies promise to revolutionize imaging:

- Single-photon avalanche diodes (SPADs) enable photon-counting cameras

- Quantum dot sensors improve color accuracy

- Entangled-photon imaging can beat the shot-noise limit in low light, and time-resolved photon counting enables non-line-of-sight imaging around corners

These advancements could enable vision systems that outperform human capabilities in both sensitivity and information density.
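Photon-counting sensors such as SPADs are ultimately limited by the randomness of photon arrival itself. Assume an ideal detector: no dark counts, dead time, or missed photons, all simplifications. Counts then follow a Poisson distribution, so the signal-to-noise ratio for a mean of N photons is sqrt(N). The simulation below draws exponential inter-arrival times, which produces Poisson counts, and checks the result against that formula. The rate and exposure values are hypothetical.

```python
import math
import random

def shot_noise_snr(mean_photons: float) -> float:
    """Ideal photon-counting pixel: counts are Poisson, so SNR = sqrt(N)."""
    return math.sqrt(mean_photons)

def simulate_counts(rate_per_s: float, exposure_s: float, trials: int, seed: int = 0):
    """Count photon arrivals per exposure using exponential inter-arrival
    times, which yields Poisson-distributed counts."""
    rng = random.Random(seed)
    counts = []
    for _ in range(trials):
        t, n = rng.expovariate(rate_per_s), 0
        while t < exposure_s:
            n += 1
            t += rng.expovariate(rate_per_s)
        counts.append(n)
    return counts

counts = simulate_counts(rate_per_s=100.0, exposure_s=1.0, trials=2000)
mean = sum(counts) / len(counts)
std = math.sqrt(sum((c - mean) ** 2 for c in counts) / (len(counts) - 1))
print(f"simulated SNR {mean / std:.1f} vs sqrt(N) {shot_noise_snr(100.0):.1f}")
```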

As neuromorphic sensors such as event cameras show, interdisciplinary approaches often yield the most significant breakthroughs. The future of artificial vision lies in combining biological principles with engineered solutions.