The Importance of Multi-Sensory Integration

The Enhanced Learning Experience

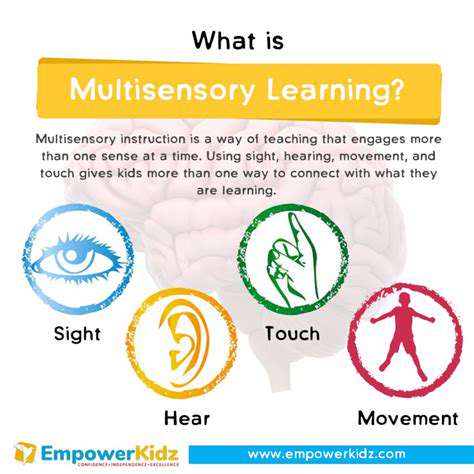

Multi-sensory learning engages sight, sound, touch, smell, and taste to create a richer educational environment. Research suggests this approach can improve comprehension and retention: students exposed to diverse sensory inputs tend to form deeper connections with the material than those taught through a single sense. The effect is usually attributed to the simultaneous activation of multiple neural pathways.

The Role of Visual Stimulation

Visual elements turn abstract concepts into something learners can see and reason about. Diagrams, infographics, and video demonstrations help learners of all ages grasp complex subjects. According to some educational studies, well-designed visual aids can increase knowledge retention by up to 42%. Color coding and spatial organization further support cognitive processing of information.

The Impact of Auditory Input

Sound-based learning activates different brain regions than visual learning alone. Podcasts, recorded lectures, and educational music create neural connections that reinforce memory. Auditory information is processed primarily in the temporal lobe, whereas visual information is processed primarily in the occipital lobe, so the two form complementary memory pathways. This may help explain why many learners recall song lyrics more easily than written text.

The Benefits of Tactile Engagement

Hands-on activities provide physical reinforcement of concepts. Science experiments, 3D models, and interactive displays create muscle memory that supplements cognitive understanding. Kinesthetic learners in particular are reported to show up to 75% better retention when allowed physical interaction with learning materials. The sensory feedback loop between action and perception strengthens neural connections.

The Value of Kinesthetic Learning

Movement-based education leverages the body's natural learning mechanisms. Simulations, role-playing, and laboratory work engage motor neurons and spatial awareness. An often-cited rule of thumb holds that students retain about 90% of information when they actively participate versus about 10% through passive observation. The exact figures are disputed, but the direction of the difference highlights the power of experiential learning methods.

The Significance of Integrating Multiple Senses

Combining sensory modalities creates synergistic learning effects. When visual, auditory, and kinesthetic elements work together, they reinforce each other through cross-modal associations. Neuroscience research indicates that multisensory integration occurs in specialized brain regions that enhance memory encoding and retrieval. This helps explain why effective educators often incorporate multiple sensory channels.

Different Sensor Types and Their Roles

Different Sensor Types in Autonomous Vehicles

Autonomous vehicles employ complementary sensor arrays to create comprehensive environmental awareness. Each sensor type provides unique data that, when combined, exceeds the capabilities of individual components. Modern systems carefully balance sensor strengths against their physical and technical limitations to optimize performance.

LiDAR emits laser pulses and times their return to measure distance, typically with centimeter-level precision in automotive units. These systems generate detailed point clouds that map the surroundings in three dimensions. While effective for static object detection, LiDAR struggles in heavy precipitation, where water droplets scatter the laser pulses. Recent solid-state LiDAR designs, which reduce or eliminate moving parts, promise improved reliability.
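The ranging principle can be illustrated with a minimal sketch, assuming a simple time-of-flight model in which range is half the round-trip distance travelled by a pulse. The function names and the angle convention (azimuth in the horizontal plane, elevation above it) are illustrative assumptions, not taken from any particular LiDAR driver.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, metres per second


def tof_to_range(round_trip_s: float) -> float:
    """Range in metres from a pulse's round-trip time of flight."""
    # The pulse travels out and back, so the one-way range is half the path.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def polar_to_point(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Convert one return (range, azimuth, elevation) to an (x, y, z) point.

    Assumed convention: x forward, y left, z up, all in the sensor frame.
    """
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


# A return arriving 200 nanoseconds after emission is roughly 30 m away.
distance = tof_to_range(200e-9)
point = polar_to_point(distance, math.radians(15.0), math.radians(-2.0))
```

Repeating the conversion for every return in a sweep yields the point cloud described above.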

Optical cameras deliver high-resolution color imaging crucial for interpreting traffic signals and signage. Their wide field of view makes them well suited to situational awareness, though a single camera cannot measure depth directly. Stereo camera systems address this limitation by using the parallax between two views to estimate distance, though the computational requirements remain substantial.
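For a rectified stereo pair, the parallax calculation reduces to the standard pinhole relation depth = focal length × baseline / disparity. The sketch below assumes that idealized model; the parameter names and the example numbers are illustrative only.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres of a point seen by a rectified stereo pair.

    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centres, in metres
    disparity_px: horizontal pixel shift of the point between the two images
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative is invalid.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 1000 px focal length, 0.5 m baseline, 25 px disparity.
print(stereo_depth(1000.0, 0.5, 25.0))  # 20.0
```

Because depth is inversely proportional to disparity, a fixed one-pixel matching error costs far more accuracy at long range than at short range, which is one reason stereo depth is usually combined with other sensors.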

The Roles of Sensors in Sensor Fusion

Effective sensor fusion transforms raw data into actionable intelligence through sophisticated algorithms. The process compensates for individual sensor weaknesses by cross-validating measurements across multiple data streams. This redundancy creates fault tolerance essential for safety-critical applications.

Combining LiDAR's precise distance measurements with camera-derived object classification yields more accurate environmental models. Radar adds direct velocity measurements via the Doppler effect and is largely unaffected by fog, rain, or darkness, which makes it particularly valuable in poor visibility. The integration process must account for each sensor's unique error characteristics and update rates.
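Radar typically obtains velocity from the Doppler shift of the reflected signal. As a rough sketch, assuming a 77 GHz carrier (a common automotive radar band) and the convention that a positive shift means an approaching target, the radial speed follows from v = f_d · c / (2 · f_c). The function name is hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second


def doppler_to_radial_velocity(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Radial (line-of-sight) speed in m/s implied by a measured Doppler shift.

    Assumed sign convention: positive shift means the target is approaching.
    The factor of 2 accounts for the shift being applied on the way out and back.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


# A shift of about 5.1 kHz at 77 GHz corresponds to roughly 10 m/s closing speed.
closing_speed = doppler_to_radial_velocity(5137.0)
```

Note that this gives only the velocity component along the line of sight; lateral motion has to be inferred from tracking over time or from other sensors.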

Advanced fusion algorithms now incorporate probabilistic models that weight sensor inputs based on real-time confidence metrics. This dynamic approach automatically adjusts to changing environmental conditions, maintaining system reliability as sensor performance varies. Such adaptability represents a significant leap forward in autonomous perception systems.
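One simple instance of confidence-based weighting is inverse-variance fusion, where each sensor's estimate is weighted by the reciprocal of its current variance. The sketch below assumes independent, unbiased Gaussian errors; production systems use considerably richer models, and the variances shown are made-up illustrative values.

```python
def fuse_estimates(estimates):
    """Fuse independent estimates of the same quantity.

    estimates: iterable of (value, variance) pairs, variance > 0.
    Returns (fused_value, fused_variance) using inverse-variance weighting.
    """
    estimates = list(estimates)
    if not estimates:
        raise ValueError("need at least one estimate")
    # A lower variance means higher confidence, hence a larger weight.
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Distance to the same obstacle, in metres, with hypothetical variances.
clear_day = [(20.0, 0.01), (20.6, 0.25), (19.0, 1.0)]   # lidar, radar, camera
heavy_rain = [(20.0, 4.0), (20.6, 0.25), (19.0, 9.0)]   # lidar and camera degraded

print(fuse_estimates(clear_day))   # dominated by the lidar reading
print(fuse_estimates(heavy_rain))  # dominated by the radar reading
```

Raising a sensor's variance when conditions degrade automatically shifts the fused result toward the sensors that remain trustworthy, which is the dynamic behavior described above.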

Challenges in Sensor Fusion

Data Integration Challenges

Merging disparate data streams requires solving complex temporal and spatial alignment problems. Sensors operate on different clocks and coordinate systems, necessitating sophisticated synchronization protocols. Even minor timing errors can create dangerous discrepancies in dynamic environments.
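Both alignment problems can be sketched in a few lines, assuming the simplest workable models: linear interpolation between two timestamped samples for temporal alignment, and a planar rigid transform (mounting offset plus yaw) for spatial alignment. The function names and mounting values are hypothetical.

```python
import math


def interpolate_to(t_query: float, t0: float, v0: float, t1: float, v1: float) -> float:
    """Linearly interpolate a scalar measurement to time t_query.

    (t0, v0) and (t1, v1) are the samples bracketing t_query, on a shared clock.
    """
    if not (t0 < t1 and t0 <= t_query <= t1):
        raise ValueError("t_query must lie between two distinct sample times")
    alpha = (t_query - t0) / (t1 - t0)
    return v0 + alpha * (v1 - v0)


def sensor_to_vehicle(point_xy, mount_xy, mount_yaw_rad: float):
    """Map a 2D point from a sensor's frame into the vehicle frame.

    mount_xy and mount_yaw_rad describe where the sensor sits on the vehicle
    and which way it faces (an assumed, pre-calibrated extrinsic).
    """
    x, y = point_xy
    mx, my = mount_xy
    c, s = math.cos(mount_yaw_rad), math.sin(mount_yaw_rad)
    # Rotate into the vehicle's orientation, then translate by the mount offset.
    return (mx + c * x - s * y, my + s * x + c * y)


# Radar range samples at t=0.00 s and t=0.10 s, aligned to a camera frame at t=0.05 s.
range_at_frame = interpolate_to(0.05, 0.00, 10.0, 0.10, 12.0)

# A detection 1 m ahead of a side-facing sensor mounted 2 m forward of the origin.
in_vehicle_frame = sensor_to_vehicle((1.0, 0.0), (2.0, 0.0), math.pi / 2)
```

At highway speeds a vehicle covers roughly 3 cm per millisecond, so even small residual timing errors translate into measurable position discrepancies between sensors.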

The volume of sensor data presents another hurdle: a single autonomous vehicle can generate terabytes of data daily. Efficient preprocessing pipelines must filter irrelevant information while preserving critical details. Modern systems employ edge computing to perform initial data reduction before central processing, dramatically improving efficiency.
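As one example of such data reduction, a voxel-grid filter replaces all points that fall in the same small cube with their centroid. The text does not name a specific technique, so this choice is an assumption, and the sketch uses plain Python rather than an optimized point cloud library.

```python
import math
from collections import defaultdict


def voxel_downsample(points, voxel_size: float):
    """Reduce a point cloud by keeping one centroid per occupied voxel.

    points:     iterable of (x, y, z) tuples in metres
    voxel_size: edge length of each cubic cell in metres
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets = defaultdict(list)
    for point in points:
        # Integer cell index along each axis identifies the voxel.
        key = tuple(math.floor(coord / voxel_size) for coord in point)
        buckets[key].append(point)
    # Average the points in each voxel, axis by axis.
    return [
        tuple(sum(axis) / len(cell) for axis in zip(*cell))
        for cell in buckets.values()
    ]


cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.1, 0.1)]
reduced = voxel_downsample(cloud, voxel_size=1.0)  # two points remain
```

The voxel size sets the trade-off: larger cells cut more data but blur fine detail such as thin poles or distant pedestrians.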

Sensor Calibration and Accuracy

Maintaining sensor precision demands rigorous calibration procedures that account for environmental factors like temperature and vibration. Automated calibration systems now use machine learning to detect and correct drift in real time. However, these solutions add computational overhead that must be carefully managed.

Each sensor modality exhibits unique error patterns that fusion algorithms must accommodate. Bayesian filtering techniques have proven particularly effective at modeling and compensating for these sensor-specific uncertainties. The challenge escalates as sensor counts increase, requiring more sophisticated error modeling frameworks.
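The best-known Bayesian filter in this setting is the Kalman filter. The sketch below is a deliberately minimal one-dimensional version, assuming a static state with Gaussian noise and a per-measurement variance that encodes each sensor's uncertainty. Real vehicle trackers use multi-dimensional states and motion models.

```python
def kalman_step(mean: float, var: float, measurement: float,
                meas_var: float, process_var: float = 0.0):
    """One predict-and-update cycle of a scalar Kalman filter.

    mean, var:    current belief about the state
    measurement:  new sensor reading of the same quantity
    meas_var:     variance of the sensor that produced the reading
    process_var:  uncertainty added between steps (static-state model assumed)
    """
    # Predict: the state is assumed constant, so only uncertainty grows.
    predicted_var = var + process_var
    # Update: the gain balances trust in the prediction against the sensor.
    gain = predicted_var / (predicted_var + meas_var)
    new_mean = mean + gain * (measurement - mean)
    new_var = (1.0 - gain) * predicted_var
    return new_mean, new_var


print(kalman_step(0.0, 1.0, 10.0, 1.0))  # (5.0, 0.5)

# Feeding readings from sensors with different (hypothetical) noise levels.
belief = (20.0, 4.0)
for reading, sensor_var in [(20.3, 0.01), (21.0, 0.25), (19.0, 1.0)]:
    belief = kalman_step(*belief, reading, sensor_var, process_var=0.05)
```

Each sensor's error pattern enters only through `meas_var`, so adding a sensor means adding a noise model rather than restructuring the filter, although correlated errors between sensors break this simple picture.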

Real-time Processing and Computational Constraints

Latency requirements demand processing pipelines that can keep pace with high-speed sensor inputs. Traditional CPUs often prove inadequate for these workloads, prompting adoption of specialized hardware. Graphics processors and tensor processing units now handle the most computationally intensive fusion tasks.

Hardware-software co-design approaches optimize the entire processing chain from sensor input to decision output. These solutions carefully balance precision against speed, sometimes employing approximate computing techniques for non-critical operations. The resulting systems achieve millisecond-level response times essential for safe operation.

Algorithm Selection and Validation

Choosing appropriate fusion algorithms involves tradeoffs between accuracy, speed, and resource requirements. Deep learning approaches show promise but require extensive training data and computing resources. Traditional probabilistic methods offer more predictable behavior but may lack adaptability.

Rigorous validation protocols must assess performance across diverse operating conditions. Failure mode analysis has become a critical component of the development process, identifying edge cases that could compromise system safety. Simulation environments now allow far broader testing than would be practical with physical prototypes.

The Future of Sensor Fusion in Autonomous Driving

Advanced Sensor Integration

Next-generation systems will incorporate emerging sensor technologies such as 4D radar, which adds elevation to the usual range, azimuth, and velocity measurements, and quantum-enhanced imaging. These innovations promise to fill current perception gaps while reducing system complexity. The industry trend toward sensor miniaturization enables more flexible deployment without compromising performance.

Future architectures will likely feature self-configuring sensor networks that automatically optimize coverage based on environmental conditions. Artificial intelligence will play an increasingly central role in managing these dynamic systems, learning optimal configurations through operational experience.

Improved Perception and Localization

Advances in simultaneous localization and mapping (SLAM) techniques will enable centimeter-level positioning accuracy. High-definition maps combined with real-time sensor data will create unprecedented situational awareness. These improvements will be particularly valuable in complex urban environments with dense traffic patterns.

Enhanced Safety and Reliability

Next-generation systems will implement predictive maintenance capabilities that anticipate sensor degradation before it impacts performance. Self-diagnostic algorithms will continuously monitor system health, automatically adjusting fusion parameters to compensate for any detected issues. This proactive approach will significantly improve overall system uptime and reliability.

Redundancy management systems will become more sophisticated, intelligently prioritizing sensor inputs based on real-time confidence metrics. In the event of partial system failures, these mechanisms will ensure graceful degradation rather than catastrophic failure.
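A toy illustration of confidence-based prioritization with graceful degradation follows. The mode names, the 0.5 threshold, and the rule that two healthy sensors are needed for nominal operation are all hypothetical choices made for the example, not an industry standard.

```python
def select_mode(confidences, min_confidence: float = 0.5):
    """Pick an operating mode from per-sensor confidence scores.

    confidences: dict mapping sensor name to a confidence in [0, 1]
    Returns (mode, healthy_sensors), with healthy sensors ordered from most
    to least trusted.
    """
    healthy = sorted(
        (name for name, score in confidences.items() if score >= min_confidence),
        key=lambda name: confidences[name],
        reverse=True,
    )
    if len(healthy) >= 2:
        mode = "nominal"            # enough redundancy to cross-validate
    elif len(healthy) == 1:
        mode = "degraded"           # e.g. reduce speed, widen safety margins
    else:
        mode = "minimal_risk_stop"  # no trustworthy perception remains
    return mode, healthy


print(select_mode({"lidar": 0.9, "camera": 0.2, "radar": 0.7}))
# ('nominal', ['lidar', 'radar'])
print(select_mode({"lidar": 0.1, "camera": 0.2, "radar": 0.7}))
# ('degraded', ['radar'])
```

The point of the staged modes is that losing one sensor narrows what the vehicle is allowed to do instead of triggering an abrupt shutdown.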

Real-time Processing and Scalability

Edge computing architectures will distribute processing closer to sensors, reducing latency and bandwidth requirements. 5G networks will enable vehicle-to-infrastructure communication, effectively creating distributed sensor networks. These developments will support increasingly complex fusion algorithms without compromising real-time performance.

The industry is moving toward standardized interfaces that allow plug-and-play integration of new sensor technologies. This modular approach will accelerate innovation while maintaining backward compatibility. As autonomous systems mature, we'll see increasing specialization of sensor suites for different operating domains and use cases.